A New Rendering API Arises: WebGPU!

WebGPU is a new API that, unlike WebGL, is not an OpenGL wrapper. Instead, it is based on native APIs like Vulkan, Metal and DirectX 12. It was first published in 2021 and is currently available via Chrome Canary.

Some useful documentation:

GitHub link for this tutorial

Although WebGPU has "Web" in its name, it is not only for browsers: there are WebGPU bindings for other languages too, such as Rust and C++. Today, we'll keep it simple and see how we can render a colorful square on an HTML canvas using TypeScript and Vite.

Project Set-Up

Since WebGPU has a lot of interfaces, we'll be using TypeScript to save us the trouble. I highly recommend using Vite, though webpack can also be used. Set up a vanilla TypeScript project using Vite, then delete the default boilerplate code. Next, we should install the WebGPU types.

npm i @webgpu/types

This installs the types, but we must also tell TypeScript to use them. Open the "tsconfig.json" file and, in "compilerOptions", add "@webgpu/types" to the "types" array, i.e. "types": ["@webgpu/types"]. This tells TypeScript to check against the additional types we installed through npm.

Code

Several WebGPU calls are asynchronous, so in the main file, let's wrap our code in an async function.

async function main(){

//-----------------Init-----------------//

if(!navigator.gpu) throw new Error('WebGPU not supported');

//canvas element to display the render on

const canvas = document.querySelector('canvas') as HTMLCanvasElement;

if(!canvas) throw new Error('Canvas not found');

}

main();

In the first line, !navigator.gpu checks whether the browser exposes the WebGPU API at all. If it does not, it is a good idea to fall back to WebGL. We also need a canvas element to display the render on. Keep in mind that the canvas takes "width" and "height" attributes that determine its size.
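The fallback idea can be sketched as a small decision function. This is only an illustration: `NavigatorLike`, `Backend` and `chooseBackend` are hypothetical names that do not appear in the tutorial's code, and the WebGL renderer itself is out of scope here.

```typescript
// Minimal shape of the part of `navigator` this sketch reads.
// (Hypothetical helper type; in the browser you would pass the real `navigator`.)
interface NavigatorLike {
  gpu?: unknown;
}

type Backend = 'webgpu' | 'webgl';

// Returns 'webgpu' when the browser exposes navigator.gpu, otherwise 'webgl'.
function chooseBackend(nav: NavigatorLike): Backend {
  return nav.gpu ? 'webgpu' : 'webgl';
}
```

In the browser, `chooseBackend(navigator)` could replace the `throw` with a branch that starts a WebGL renderer instead, assuming you have one.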

//...main

//gpu adapter to get the gpu device

const gpuAdapter = await navigator.gpu.requestAdapter({

powerPreference: 'high-performance' //or try 'low-power'

});

if(!gpuAdapter) throw new Error('GPU Adapter not found');

//get the gpu device

const gpuDevice = await gpuAdapter.requestDevice();

if(!gpuDevice) throw new Error('GPU Device not found');

}

main();

Continuing with the main function, we need a GPU adapter in order to get the actual GPU device. The adapter represents a GPU that the browser is willing to expose, and the device is the connection to it that we do all our work through. We can also pass a power preference to requestAdapter(). With 'high-performance', the browser tries to give us the dedicated graphics card, if one exists; with 'low-power', it tries to give us the integrated one. This is only a hint, though: 'high-performance' may still return the iGPU, depending on the browser and the settings of the machine it runs on.
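If you prefer to keep the two-step handshake out of main(), it can be wrapped in a helper. The sketch below is one possible shape, not part of the tutorial's code: `initDevice`, `GpuLike` and `AdapterLike` are made-up names, and the small structural interfaces exist only so the snippet does not depend on @webgpu/types. The real `navigator.gpu` should satisfy them.

```typescript
type PowerPreference = 'high-performance' | 'low-power';

// Minimal structural types (assumptions for this sketch, not the official typings).
interface AdapterLike<D> {
  requestDevice(): Promise<D>;
}

interface GpuLike<D> {
  requestAdapter(options?: { powerPreference?: PowerPreference }): Promise<AdapterLike<D> | null>;
}

// Hypothetical helper: asks for an adapter first, then for its device.
// Throws the same error message as the tutorial when no adapter is available.
async function initDevice<D>(
  gpu: GpuLike<D>,
  powerPreference: PowerPreference = 'high-performance',
): Promise<D> {
  const adapter = await gpu.requestAdapter({ powerPreference });
  if (!adapter) throw new Error('GPU Adapter not found');
  return adapter.requestDevice();
}
```

Inside main(), this would be called as `const gpuDevice = await initDevice(navigator.gpu);`.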

//...main

const gpuContext = canvas.getContext('webgpu');

if(!gpuContext) throw new Error('GPU Context not found');

gpuContext.configure({

device: gpuDevice,

format: 'bgra8unorm',

});

}

main();

A canvas supports several context types; in our case, we need the 'webgpu' context. We then configure the context with the GPU device and a format. The format is the texture format of the images that the canvas will display.
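The format is hard-coded to 'bgra8unorm' here. Browsers that implement the current WebGPU API also expose navigator.gpu.getPreferredCanvasFormat(), which reports the canvas format the platform prefers. A hedged sketch of using it with a fallback follows; `pickCanvasFormat` and `FormatSource` are hypothetical names, and the optional check is only there for builds that might lack the method.

```typescript
// Minimal shape of the part of navigator.gpu this sketch reads (an assumption,
// kept structural so the snippet compiles without @webgpu/types).
interface FormatSource<F extends string> {
  getPreferredCanvasFormat?: () => F;
}

// Returns the platform's preferred canvas format when the browser reports one,
// otherwise the fallback that the caller passes in.
function pickCanvasFormat<F extends string>(gpu: FormatSource<F>, fallback: F): F {
  return gpu.getPreferredCanvasFormat?.() ?? fallback;
}
```

In main(), this could look like `const format = pickCanvasFormat(navigator.gpu, 'bgra8unorm');`. If you go this way, the same value has to be used for the fragment target format of the render pipeline, since the two must match.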

Before continuing with the TypeScript code, let's take a look at the WebGPU Shading Language (WGSL). Unlike general-purpose languages like JavaScript, shading languages run on the GPU. A GPU can run the same small program for many vertices or pixels in parallel, so by putting heavy work like the shading stages on the GPU, we save a lot of time and rendering becomes much faster. There are several shader stages, but for now we only need to care about the vertex and fragment stages. Create new shader files named "color.vert.wgsl" and "color.frag.wgsl".

struct Output {

@builtin(position) Position : vec4<f32>,

@location(0) vColor : vec4<f32>,

};

@vertex

fn main(@location(0) pos: vec4<f32>, @location(1) color: vec4<f32>) -> Output {

var output: Output;

output.Position = pos;

output.vColor = color;

return output;

};

This is the vertex shader code. Let's take a look at the main function first.

- fn declares a function. Functions can take parameters and return values. As in statically typed languages, we must specify parameter types, return types, etc.

- @vertex means that this function is the entry point of the vertex stage.

- struct is similar to a C struct: it groups several variables together.

- @builtin(position) marks the built-in position output. The vertex stage must always return the position of the vertex.

- @location(n) identifies an input or output slot. On the function parameters, it selects which vertex buffer attribute (position or color) is read; on the struct, it marks the value that is passed on to the fragment stage. It is similar to layout locations in GLSL.

Basically, this shader takes position and color data from the vertex buffer, outputs the position, and passes the color on to the fragment shader.

@fragment

fn main(@location(0) vColor: vec4<f32>) -> @location(0) vec4<f32> {

return vColor;

};

This is the fragment shader code, which runs for every pixel that the shape covers. The color it receives from the vertex stage has already been linearly interpolated between the vertex colors, so simply returning it results in a colorful render.

Now that we've seen the shader code, we can import it into our main.ts file. If we try to import a .wgsl file the regular way, we'll get an error! Thanks to Vite, though, we can import any file as a raw string by adding "?raw" to the end of the file path, e.g. import vertCode from './color.vert.wgsl?raw' (and likewise fragCode for the fragment shader). After that, we need vertices to pass to the vertex shader. Let's create the vertex data for a square. Create a new TypeScript file and import it into main.ts:

const vertices = new Float32Array([

//X Y Z R G B

0.0, 0.75, 0.0, 1.0, 0.0, 0.0,

0.75, 0.0, 0.0, 0.0, 1.0, 0.0,

0.0, -0.75, 0.0, 0.0, 0.0, 1.0,

-0.75, 0.0, 0.0, 1.0, 1.0, 1.0,

]);

const indices = new Uint32Array([

0, 1, 2,

2, 3, 0,

]);

export { vertices, indices };

In WebGPU's normalized device coordinates, the bottom-left corner of the canvas is (-1, -1) and the top-right corner is (1, 1), with (0, 0) at the center. In this code, every vertex has 6 floats assigned to it: the first 3 are position data and the last 3 are color data. But what are indices?

Indices tell the GPU which vertices make up each triangle, and in which order. All objects are actually rendered as triangles, and our square needs two of them. Instead of storing the two shared corners twice, we store each vertex once and refer to it by its index. In short, the first triangle is rendered with vertices 0, 1 and 2, and the second triangle is rendered with vertices 2, 3 and 0. After this, we can return to our main function.
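To make the role of the indices concrete, the sketch below expands the index list back into triangles on the CPU. It is purely illustrative (the GPU does this lookup for us during drawIndexed); `trianglesFromIndices`, `squareVertices` and `squareIndices` are names chosen for this example, with the data copied from the vertex file above.

```typescript
const FLOATS_PER_VERTEX = 6; // X Y Z R G B

// Same data as the vertex file, repeated so the sketch is self-contained.
const squareVertices = new Float32Array([
  0.0, 0.75, 0.0, 1.0, 0.0, 0.0,
  0.75, 0.0, 0.0, 0.0, 1.0, 0.0,
  0.0, -0.75, 0.0, 0.0, 0.0, 1.0,
  -0.75, 0.0, 0.0, 1.0, 1.0, 1.0,
]);
const squareIndices = new Uint32Array([0, 1, 2, 2, 3, 0]);

// Returns, for every group of three indices, the (x, y, z) positions of the
// three referenced vertices.
function trianglesFromIndices(vertices: Float32Array, indices: Uint32Array): number[][][] {
  const triangles: number[][][] = [];
  for (let i = 0; i + 2 < indices.length; i += 3) {
    const corners: number[][] = [];
    for (let k = 0; k < 3; k++) {
      const base = indices[i + k] * FLOATS_PER_VERTEX;
      corners.push(Array.from(vertices.subarray(base, base + 3)));
    }
    triangles.push(corners);
  }
  return triangles;
}
```

Calling `trianglesFromIndices(squareVertices, squareIndices)` yields two triangles that share the corners at index 0 and index 2, which is exactly the diagonal of the square.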

//...main

//---------------Pipeline---------------//

const gpuPipeline = gpuDevice.createRenderPipeline({

layout:"auto",

vertex:{

module: gpuDevice.createShaderModule({

code: vertCode

}),

entryPoint: 'main',

buffers: [

{

arrayStride: (3+3) * 4, //3 floats for position and 3 floats for color per vertex. 4 bytes per float32, that makes up for 24 bytes per vertex

attributes: [

{

shaderLocation: 0,

offset: 0,

format: 'float32x3'

},

{

shaderLocation: 1,

offset: 3 * 4, //3 floats * 4 bytes per float32, basically the offset for the color attribute

format: 'float32x3'

}

]

}

]

},

fragment:{

module: gpuDevice.createShaderModule({

code: fragCode

}),

entryPoint: 'main',

targets:[

{

format: 'bgra8unorm'

}

],

},

primitive:{

topology: 'triangle-list', //there are also triangle-strip, point-list, line-list and line-strip. Try them all!

}

});

To draw anything, we need a render pipeline. It describes how the GPU should process our data, and it is also where we connect our shaders. Our pipeline takes layout, vertex, fragment and primitive properties. The vertex property takes a shader module, an entry point and buffers. The module is essentially the shader code we've created. The entry point is the name of the vertex stage function; remember, we named that function "main". The buffers array describes how the vertex data is laid out in memory: arrayStride is the number of bytes per vertex, and each attribute maps a byte offset within a vertex to a @location in the shader. The fragment property also takes a target format, which is the canvas format we specified: 'bgra8unorm'.
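The stride and offset arithmetic in the buffers entry can also be derived instead of written by hand. The following sketch assumes tightly packed, interleaved float attributes like ours; `interleavedLayout`, `FORMAT_BYTES` and the local interfaces are hypothetical names for this example, not WebGPU API, although the returned object has the same fields as the buffer layout used in the pipeline above.

```typescript
type VertexFormat = 'float32x2' | 'float32x3' | 'float32x4';

// Size in bytes of each supported format (4 bytes per float32 component).
const FORMAT_BYTES: Record<VertexFormat, number> = {
  float32x2: 8,
  float32x3: 12,
  float32x4: 16,
};

interface VertexAttribute {
  shaderLocation: number;
  offset: number;
  format: VertexFormat;
}

interface VertexBufferLayout {
  arrayStride: number;
  attributes: VertexAttribute[];
}

// Packs the attributes one after another and numbers the shader locations 0, 1, 2, ...
function interleavedLayout(formats: VertexFormat[]): VertexBufferLayout {
  let offset = 0;
  const attributes = formats.map((format, shaderLocation) => {
    const attribute: VertexAttribute = { shaderLocation, offset, format };
    offset += FORMAT_BYTES[format];
    return attribute;
  });
  // After the last attribute, the running offset equals the size of one vertex.
  return { arrayStride: offset, attributes };
}
```

For our position-plus-color vertices, `interleavedLayout(['float32x3', 'float32x3'])` gives an arrayStride of 24 and offsets of 0 and 12, matching the values written out in the pipeline.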

//...main

const gpuVertexBuffer = gpuDevice.createBuffer({

size: vertices.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST

});

gpuDevice.queue.writeBuffer(gpuVertexBuffer, 0, vertices);

const gpuIndexBuffer = gpuDevice.createBuffer({

size: indices.byteLength,

usage: GPUBufferUsage.INDEX | GPUBufferUsage.COPY_DST

});

gpuDevice.queue.writeBuffer(gpuIndexBuffer, 0, indices);

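Next, we must upload our data, the vertices and indices, to the GPU. For each array, we create a buffer with the right size and usage, then copy the data into it with writeBuffer(). The COPY_DST usage flag is what allows the buffer to be written to.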
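Since the vertex and index buffers are created the same way, the pattern can be folded into one helper. This is a sketch under assumptions: `uploadBuffer` and `BufferDeviceLike` are invented names, the interface is a minimal structural stand-in for the device, and `COPY_DST` repeats the numeric value of GPUBufferUsage.COPY_DST from the WebGPU specification so the snippet runs without the browser global.

```typescript
// Minimal structural view of the device (an assumption for this sketch).
interface BufferDeviceLike<B> {
  createBuffer(descriptor: { size: number; usage: number }): B;
  queue: {
    writeBuffer(buffer: B, bufferOffset: number, data: ArrayBufferView): void;
  };
}

// Numeric value of GPUBufferUsage.COPY_DST.
const COPY_DST = 0x0008;

// Creates a buffer sized for `data`, makes it writable, and copies `data` into it.
function uploadBuffer<B>(device: BufferDeviceLike<B>, data: ArrayBufferView, usage: number): B {
  const buffer = device.createBuffer({ size: data.byteLength, usage: usage | COPY_DST });
  device.queue.writeBuffer(buffer, 0, data);
  return buffer;
}
```

With it, the two buffers above could be created as `uploadBuffer(gpuDevice, vertices, GPUBufferUsage.VERTEX)` and `uploadBuffer(gpuDevice, indices, GPUBufferUsage.INDEX)`.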

//...main

//-------------Render Pass--------------//

const gpuCommandEncoder = gpuDevice.createCommandEncoder();

const gpuRenderPass = gpuCommandEncoder.beginRenderPass({

colorAttachments:[

{

view: gpuContext.getCurrentTexture().createView(),

clearValue: {r: 0.03, g: 0.07, b: 0.15, a: 1.0},

loadOp: 'clear',

storeOp: 'store'

}

]

});

gpuRenderPass.setPipeline(gpuPipeline);

gpuRenderPass.setVertexBuffer(0, gpuVertexBuffer);

gpuRenderPass.setIndexBuffer(gpuIndexBuffer, 'uint32');

gpuRenderPass.drawIndexed(indices.length, 1, 0, 0, 0);

gpuRenderPass.end();

gpuDevice.queue.submit([gpuCommandEncoder.finish()]);

}

main();

Now that we've set up everything, it's time to connect it all together! A command encoder records the commands we want to send to the GPU. With the command encoder, we create a render pass encoder. It takes a colorAttachments array, where each attachment has a view and a clearValue. The view is basically the texture that the HTML canvas will display, while clearValue is the background color of the view. With loadOp set to 'clear', the view is filled with that color before drawing, and with storeOp set to 'store', the result is kept. Back to the render pass: we set the pipeline, the vertex buffer and the index buffer we created, call drawIndexed() with the number of indices, and end the pass. After ending the pass, we finish the encoder and submit the resulting command buffer to the GPU queue.
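The clearValue channels are floats between 0 and 1, which is less familiar than the hex colors used in CSS. As a small convenience, a converter could look like the sketch below; `hexToClearValue` and `ClearColor` are hypothetical names, and only six-digit hex colors are handled.

```typescript
interface ClearColor {
  r: number;
  g: number;
  b: number;
  a: number;
}

// Converts a six-digit hex color such as "#081226" into 0..1 float channels.
function hexToClearValue(hex: string, alpha = 1.0): ClearColor {
  const match = /^#?([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) throw new Error(`Expected a color like "#081226", got "${hex}"`);
  // Each captured pair is one channel in the range 0..255.
  const [r, g, b] = match.slice(1).map((channel) => parseInt(channel, 16) / 255);
  return { r, g, b, a: alpha };
}
```

For example, `clearValue: hexToClearValue('#081226')` gives a dark blue close to the one used in the render pass above.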

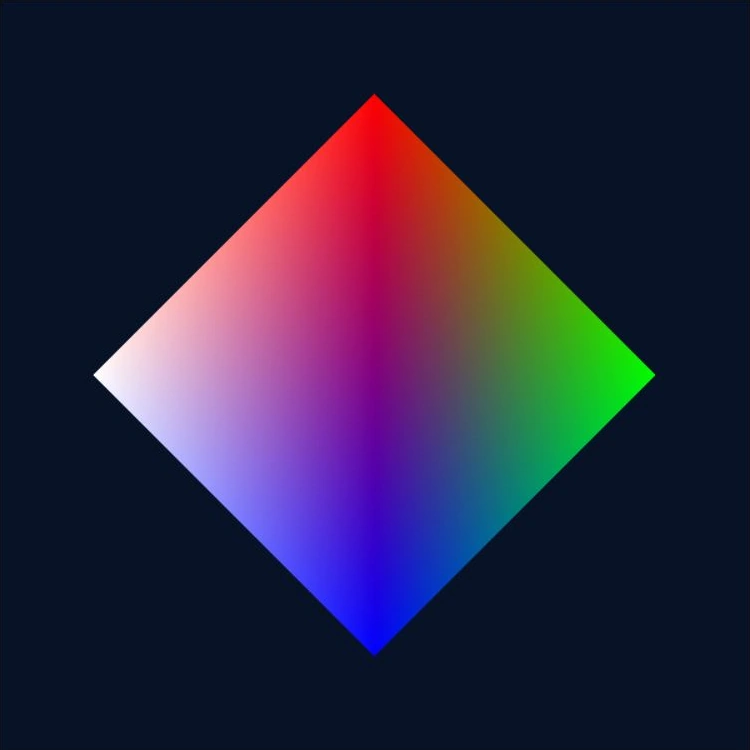

After refreshing the page, we see a fancy-looking square!

In the end, WebGPU is pretty similar to Vulkan, but the good thing is that we don't actually need 1000+ lines of code just to get a triangle on screen 😛